This chip is used for the GeForce FX 5800 and 5800 Ultra.

This is my second GeForce FX 5800 Ultra review sample from nVidia. Because I always liked this card, I thought it would be a good idea to have a spare one.

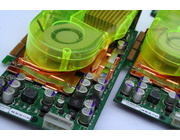

Because this card is in better condition than the other one, I keep it in the box as much as possible. The card has fewer fingerprints on it (which show on the copper after a few years) and the fan is still good. This card is a lot less noisy than the other one!

I also noticed that this card has a different BIOS, which was probably flashed by the reviewer or by someone who used it afterwards. It's dead silent at startup because the fan doesn't spin at all. The moment a 3D application starts, the fan starts spinning, and after the 3D application is closed it keeps spinning until the temperature is down to around 48 to 49°C. After that the fan stays off and the GPU slowly rises in temperature again. In an open room it settles at about 64°C after a while, without the fan spinning.
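The fan behaviour I see on this card can be sketched as a tiny state machine. This is only my guess at the logic based on what I observed, not the actual BIOS code: the `FanController` class, its `update` method and the 49°C switch-off threshold are all assumptions made for illustration.

```python
from dataclasses import dataclass

# Assumed switch-off point, taken from the observed 48 to 49 C range.
FAN_OFF_AT_OR_BELOW_C = 49.0


@dataclass
class FanController:
    """Hypothetical model of the observed fan behaviour (not real BIOS logic)."""

    fan_on: bool = False  # fan is off at startup

    def update(self, temp_c: float, in_3d: bool) -> bool:
        """Return the fan state for the current temperature and load."""
        if in_3d:
            # Any 3D application switches the fan on, whatever the temperature.
            self.fan_on = True
        elif self.fan_on and temp_c <= FAN_OFF_AT_OR_BELOW_C:
            # After 3D ends, the fan keeps running until the GPU has cooled down.
            self.fan_on = False
        # Otherwise keep the previous state: an idle GPU that is warming up
        # does not switch the fan back on by itself.
        return self.fan_on


if __name__ == "__main__":
    fan = FanController()
    # (temperature in C, is a 3D application running?)
    samples = [(45.0, False), (70.0, True), (60.0, False), (48.5, False), (64.0, False)]
    for temp_c, in_3d in samples:
        state = "on" if fan.update(temp_c, in_3d) else "off"
        print(f"{temp_c:5.1f} C, 3D={in_3d}: fan {state}")
```

The point of the sketch is that temperature alone never switches the fan on in this model, which would explain why the card can sit at about 64°C in 2D with the fan standing still.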

At the end of 2002 the Radeon 9700 Pro was the fastest card money could buy. ATi was going pretty strong with their new cards and everyone was looking forward to nVidia's answer. The GeForce FX looked good on paper, but in reality it didn't deliver.

The 5800 Ultra was nVidia's top-of-the-line model, featuring DDR2-SDRAM and high clock speeds. It's also known for its cooling, which is quite loud, especially after a few years of operation when the fan wears out.

With the GeForce FX nVidia didn't get the performance crown, and ATi started to get a good reputation with the 9700 Pro. The budget version of the Radeon, the 9500 Pro, was in the back of people's minds as well. But thanks to nVidia's strong marketing and reasonably performing low-budget and mid-range cards, the GeForce FX sold in large quantities. Even in 2005 the GeForce FX 5200 was still being sold for budget systems!

In the beginning nVidia started with the 5200, 5600 and 5800, each with an Ultra version. After it turned out that the first revision didn't work out as it should, nVidia launched cards like the 5500, 5600XT, 5700, 5700 Ultra, 5900 and 5950 Ultra. The later cards in that list had some good improvements (a flip-chip GPU that allowed a 256-bit memory bus), but the slower ones were only good for 2D rather than 3D. Also, the very first GeForce FX 5200 cards used a more expensive 128-bit memory bus, but most companies sold their GeForce FX 5200 with a 64-bit memory bus. Even the GeForce FX 5600 was sometimes sold with a 64-bit memory bus! Because of this the first benchmarks turned out quite well, but the cards that were sold afterwards were about 50% slower. A good 'business trick', but not for those who actually bought the card and wanted a 'full' card with a 128-bit memory bus.
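To put a number on what halving the memory bus does, here is a rough sketch of the peak bandwidth calculation (bus width in bytes times the effective data rate). The 400 MT/s data rate is an assumed example value, not a figure for any specific card, and the function name is just for illustration.

```python
def memory_bandwidth_gb_s(bus_width_bits: int, effective_rate_mt_s: float) -> float:
    """Peak theoretical memory bandwidth in GB/s (1 GB = 10**9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * effective_rate_mt_s * 1e6 / 1e9


# Assumption: the same effective data rate on both variants (example value).
ASSUMED_RATE_MT_S = 400.0

full_card = memory_bandwidth_gb_s(128, ASSUMED_RATE_MT_S)
cut_down_card = memory_bandwidth_gb_s(64, ASSUMED_RATE_MT_S)

print(f"128-bit bus: {full_card:.1f} GB/s")
print(f" 64-bit bus: {cut_down_card:.1f} GB/s")
print(f"ratio: {cut_down_card / full_card:.0%}")
```

At the same memory clock the 64-bit card has exactly half the peak bandwidth. Real-world frame rates don't have to drop by exactly half, since that depends on how memory-bound a game is, but it shows why the cut-down cards fell so far behind the review samples.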

The GeForce FX 5800 Ultra disappeared pretty soon and the GeForce FX 5900 (in all its forms) took its place. In the end ATi had the better product at that time (except for Linux drivers), until nVidia released the GeForce 6 series, which set things right again. Not to mention its DirectX 9 performance, which was a lot better than that of the 5-series.